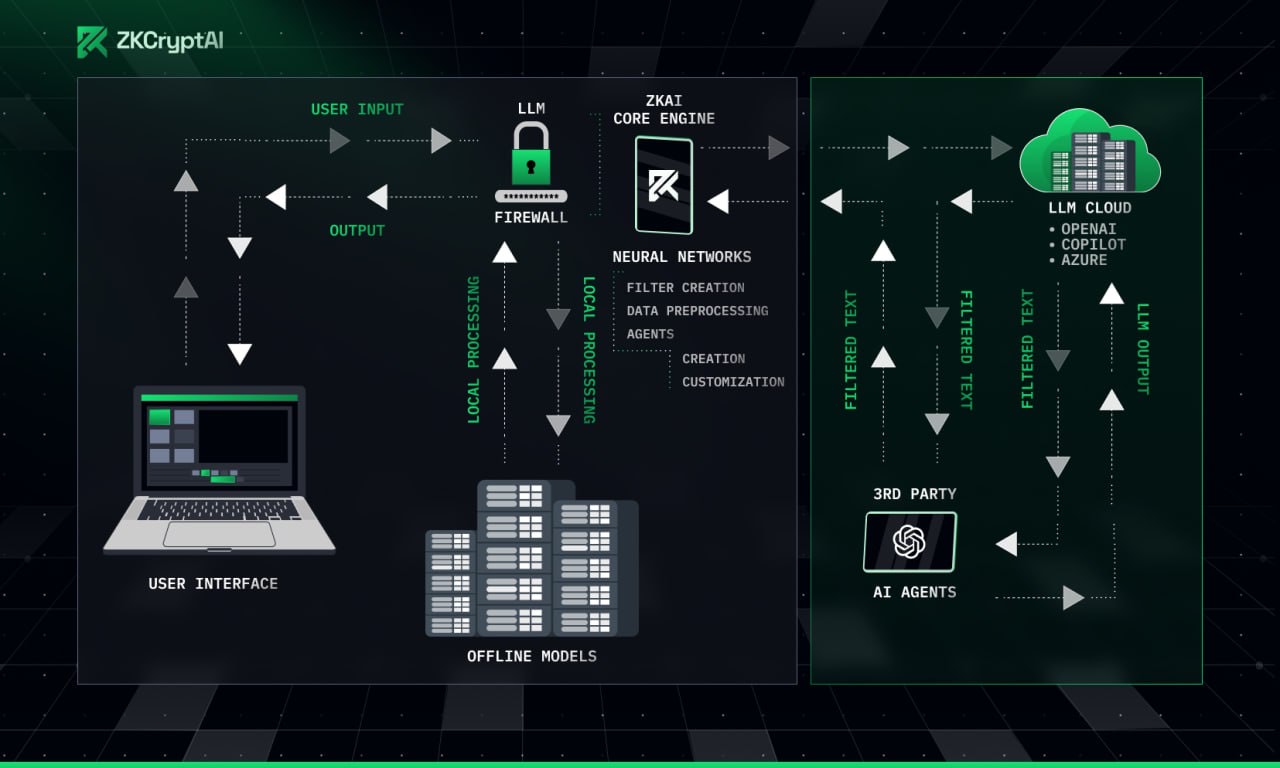

The LLM Firewall operates within a decentralized architecture designed to ensure that user data is handled securely at every stage of interaction with AI models.

The system consists of the following components:

- User Interface (UI): The front end where users interact with the firewall to create agents, set policies, and monitor activity.
- ZK Core Engine: The core processing unit that handles data filtering, entity recognition, and interaction with both cloud and offline AI models.
- Filter Creation: Creates customizable filters that define which data should be processed and which should be protected.
- Data Preprocessing: Removes sensitive information and applies the necessary filters before the data reaches the LLMs.
- Customizable Agents: Creates agents based on specific needs, such as offline or online models, work environment, and custom filters.

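To make the relationship between filters, preprocessing, and agents more concrete, here is a minimal Python sketch. It is not the firewall's actual implementation: the names `Filter`, `Agent`, `preprocess`, and the two regular expressions are hypothetical, and a real entity recognizer would likely go well beyond simple pattern matching. The sketch only illustrates the idea that an agent carries a set of filters, and that those filters are applied to a prompt before it is sent to any model.

```python
import re
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Filter:
    """Hypothetical filter: a label plus a pattern for data to protect."""
    name: str
    pattern: re.Pattern


# Illustrative patterns only; real entity recognition would be more robust.
EMAIL = Filter("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"))
PHONE = Filter("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d"))


def preprocess(text: str, filters: list[Filter]) -> str:
    """Replace every match of each filter with a placeholder like [EMAIL]."""
    for f in filters:
        text = f.pattern.sub(f"[{f.name}]", text)
    return text


@dataclass
class Agent:
    """Hypothetical agent: a model mode plus the filters it enforces."""
    name: str
    mode: str  # assumed values: "offline" or "online"
    filters: list[Filter] = field(default_factory=list)

    def prepare(self, prompt: str) -> str:
        # Sensitive data is removed before the prompt leaves the firewall.
        return preprocess(prompt, self.filters)


agent = Agent(name="support-bot", mode="online", filters=[EMAIL, PHONE])
safe_prompt = agent.prepare("Mail alice@example.com or call +1 555-123-4567.")
print(safe_prompt)
```

In this sketch the filters run in the order they are listed on the agent, so an agent for an offline model could carry a shorter filter list than one that forwards prompts to a cloud model.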
